Supported Benchmarks

Note: These benchmark implementations only run on H-Store and VoltDB. Many of them have been ported to our other project, OLTP-Bench, which supports JDBC-based DBMSs.

Telecom One (tm1)

| Property | Value |
|---|---|
| Specification | Version 1.0 |
| Source Code | /src/benchmarks/edu/brown/benchmark/tm1 |
| Number of Tables | 4 |
| Number of Procedures | 9 |

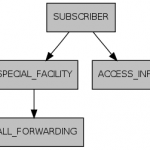

The TM1 benchmark is a newer OLTP testing application that simulates a typical caller location system used by telecommunication providers. The benchmark consists of four tables, three of which are foreign key descendants of the root SUBSCRIBER table. All procedures reference tuples using either SUBSCRIBER’s primary key or a separate unique identification string. Stored procedures that receive this primary key as an input parameter are always single-partition, since they can be routed immediately to the proper partition. Procedures that only provide the non-primary key identifier must broadcast queries to all partitions to discover which partition holds the SUBSCRIBER record that corresponds to this identification string.
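The routing difference between the two kinds of TM1 procedures can be sketched as follows. This is an illustration under stated assumptions, not H-Store's implementation: SUBSCRIBER is assumed to be partitioned by hashing its primary key S_ID with a simple modulo (standing in for H-Store's real hash function), and the separate identification string is the SUB_NBR column from the TATP specification. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch: the modulo hash on S_ID stands in for H-Store's real partitioning function.
class Tm1Router {
    private final int numPartitions;

    Tm1Router(int numPartitions) {
        this.numPartitions = numPartitions;
    }

    // Partition that owns the SUBSCRIBER row (and its descendant rows) for this S_ID.
    int partitionFor(long sId) {
        return (int) Math.floorMod(sId, (long) numPartitions);
    }

    // S_ID is known: the request goes to exactly one partition.
    List<Integer> routeBySubscriberId(long sId) {
        return Collections.singletonList(partitionFor(sId));
    }

    // Only SUB_NBR is known: it says nothing about placement, so every partition is queried.
    List<Integer> routeBySubscriberNumber(String subNbr) {
        List<Integer> targets = new ArrayList<>();
        for (int p = 0; p < numPartitions; p++) {
            targets.add(p);
        }
        return targets;
    }

    public static void main(String[] args) {
        Tm1Router router = new Tm1Router(4);
        System.out.println(router.routeBySubscriberId(10L));                   // [2]
        System.out.println(router.routeBySubscriberNumber("000000000000010")); // [0, 1, 2, 3]
    }
}
```

The broadcast path ignores its argument on purpose: without an index that maps SUB_NBR to a partition, the only way to find the matching row is to ask every partition.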

Note: The TM1 benchmark has since been renamed to TATP.

TPC-C (tpcc)

| Property | Value |
|---|---|
| Specification | Version 5.10 |
| Source Code | /src/benchmarks/org/voltdb/benchmark/tpcc |
| Number of Tables | 9 |
| Number of Procedures | 5 |

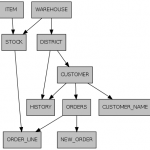

The TPC-C benchmark is the current industry standard for evaluating the performance of OLTP systems. It consists of nine tables and five stored procedures that simulate a warehouse-centric order processing application. Every stored procedure in TPC-C takes a warehouse id as an input parameter, and this id is the ancestral foreign key for all tables except ITEM. Approximately 90% of the ORDER records can be processed using single-partition transactions, because all of the corresponding ORDER_LINE records are derived from the same warehouse as the order. The remaining 10% of transactions have queries that must be re-routed to the partition that holds the remote warehouse’s fragment.
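Whether a NewOrder-style transaction stays on one partition depends only on the warehouses it touches. The sketch below assumes (hypothetically) that warehouses are assigned to partitions round-robin by W_ID and that ITEM is replicated on every partition, so item lookups never leave the home partition. The class name and mapping are illustrative, not taken from the H-Store source.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical sketch: warehouses map to partitions round-robin by W_ID,
// and ITEM is assumed to be replicated on every partition.
class TpccRouting {
    private final int numPartitions;

    TpccRouting(int numPartitions) {
        this.numPartitions = numPartitions;
    }

    // TPC-C warehouse ids start at 1.
    int partitionFor(int wId) {
        return Math.floorMod(wId - 1, numPartitions);
    }

    // Partitions touched: the home warehouse plus the supplying warehouse of each order line.
    Set<Integer> partitionsTouched(int homeWId, int[] supplyWIds) {
        Set<Integer> parts = new HashSet<>();
        parts.add(partitionFor(homeWId));
        for (int supplyWId : supplyWIds) {
            parts.add(partitionFor(supplyWId));
        }
        return parts;
    }

    boolean isSinglePartition(int homeWId, int[] supplyWIds) {
        return partitionsTouched(homeWId, supplyWIds).size() == 1;
    }

    public static void main(String[] args) {
        TpccRouting routing = new TpccRouting(4);
        System.out.println(routing.isSinglePartition(1, new int[] {1, 1, 1})); // true
        System.out.println(routing.isSinglePartition(1, new int[] {1, 2, 1})); // false
    }
}
```

The check compares partitions rather than warehouse ids: with four partitions, an order at warehouse 1 that is supplied by warehouse 5 still runs as a single-partition transaction, because both warehouses map to partition 0.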

AuctionMark (auctionmark)

| Property | Value |
|---|---|
| Specification | Version 1.0 |
| Source Code | /src/benchmarks/edu/brown/benchmark/auctionmark |
| Number of Tables | 16 |
| Number of Procedures | 14 |

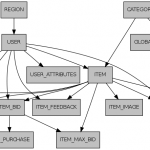

AuctionMark is a new OLTP benchmark being developed by Brown University and a well-known online auction web site. The benchmark is specifically designed to model the workload characteristics of an online auction site running on a shared-nothing parallel database. It consists of 16 tables and 14 stored procedures, including one procedure that is executed at a regular interval to process recently ended auctions.
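The periodic procedure that processes recently ended auctions needs to pick up only auctions that closed since its previous run. A minimal sketch of that windowing, assuming each item carries an end timestamp; the class, the in-memory list, and the millisecond timestamps are hypothetical stand-ins for the benchmark's tables and procedure.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: each invocation processes auctions that ended in (lastRun, now].
class EndedAuctionSweeper {
    static final class Item {
        final long id;
        final long endTimeMillis;

        Item(long id, long endTimeMillis) {
            this.id = id;
            this.endTimeMillis = endTimeMillis;
        }
    }

    private long lastRunMillis;

    EndedAuctionSweeper(long startMillis) {
        this.lastRunMillis = startMillis;
    }

    // Returns the ids of items whose auctions ended since the previous sweep,
    // then advances the window so no item is processed twice.
    List<Long> sweep(List<Item> items, long nowMillis) {
        List<Long> ended = new ArrayList<>();
        for (Item item : items) {
            if (item.endTimeMillis > lastRunMillis && item.endTimeMillis <= nowMillis) {
                ended.add(item.id);
            }
        }
        lastRunMillis = nowMillis;
        return ended;
    }

    public static void main(String[] args) {
        List<Item> items = new ArrayList<>();
        items.add(new Item(1, 500));
        items.add(new Item(2, 1500));
        items.add(new Item(3, 2500));
        EndedAuctionSweeper sweeper = new EndedAuctionSweeper(0);
        System.out.println(sweeper.sweep(items, 1000)); // [1]
        System.out.println(sweeper.sweep(items, 2000)); // [2]
    }
}
```

The half-open window makes consecutive sweeps disjoint, so an auction that ends exactly at a sweep boundary is handled once.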

SEATS (seats)

| Property | Value |
|---|---|
| Specification | – |
| Source Code | /src/benchmarks/edu/brown/benchmark/seats |
| Number of Tables | 8 |
| Number of Procedures | 6 |

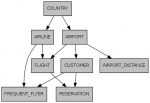

The Stonebraker Electronic Airline Ticketing System (SEATS) benchmark models an online ticketing service where customers search for flights, make reservations, and update their frequent flyer information. This benchmark is still a work in progress.

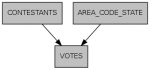

Voter Benchmark (voter)

| Property | Value |
|---|---|
| Specification | https://community.voltdb.com/node/47 |
| Source Code | /src/benchmarks/edu/brown/benchmark/voter |
| Number of Tables | 3 |
| Number of Procedures | 1 |

The Voter workload is derived from the software system that powers the Japanese version of the “American Idol” talent show. Users call in to vote for their favorite contestant during the show, which invokes a new transaction that updates the total number of votes for each contestant. The DBMS records the number of votes made by each user based on their phone number; each user is only allowed to vote a fixed number of times. A separate transaction is periodically invoked to display the vote totals while the show is broadcast live. This benchmark is designed to saturate the DBMS with many short-lived transactions that all update a small number of records. It was originally ported from VoltDB.

Bingo Benchmark (bingo)

| Property | Value |
|---|---|
| Specification | – |
| Source Code | /src/benchmarks/org/voltdb/benchmark/bingo |
| Number of Tables | 3 |
| Number of Procedures | 4 |

A simple benchmark that models a faux bingo hall. It was originally ported from VoltDB.

Wikipedia Benchmark (wikipedia)

| Property | Value |
|---|---|
| Specification | – |
| Source Code | /src/benchmarks/edu/brown/benchmark/wikipedia |
| Number of Tables | 11 |
| Number of Procedures | 8 |

This workload is based on the popular online encyclopedia. Since the website’s underlying software, MediaWiki, is open-source, we are able to use the real schema, transactions, and queries used by the live website. This benchmark’s workload is derived from (1) data dumps, (2) statistical information on the read/write ratios, and (3) front-end access patterns and several personal email communications with the Wikipedia administrators. We extracted and modeled the most common operations in Wikipedia for article and “watchlist” management. These two operation categories account for over 99% of the actual workload executed on Wikipedia’s underlying DBMS cluster. This benchmark is useful for stress-testing many “corner cases” of a system, such as large tuple sizes. Furthermore, the combination of a large database (including large secondary indexes), a complex schema, and the use of transactions makes this benchmark invaluable for testing novel indexing, caching, and partitioning strategies.

This benchmark was originally ported from the OLTP-Bench project.

YCSB (ycsb)

| Property | Value |
|---|---|
| Specification | – |
| Source Code | /src/benchmarks/edu/brown/benchmark/ycsb |
| Number of Tables | 1 |
| Number of Procedures | 1 |

A port of the Yahoo! Cloud Serving Benchmark (YCSB). This version is based on the port in the OLTP-Bench project.

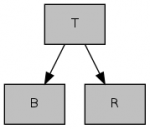

Locality Benchmark (locality)

| Property | Value |
|---|---|
| Specification | – |
| Source Code | /src/benchmarks/edu/brown/benchmark/locality |
| Number of Tables | 2 |
| Number of Procedures | 2 |

The Locality benchmark is a micro-benchmark used to measure the overhead of multi-partition data access.
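This page does not describe how the Locality benchmark controls its mix of local and remote accesses. One common way to parameterize such a micro-benchmark, shown here purely as a hypothetical sketch, is a single knob giving the probability that a transaction reads data from a partition other than the one it runs on. Sweeping that knob from 0 to 1 while measuring throughput isolates the cost of multi-partition access. The class and parameter names are assumptions.

```java
import java.util.Random;

// Hypothetical sketch: remoteFraction is the probability that a transaction
// reads data from a partition other than its base partition.
class LocalityWorkload {
    private final int numPartitions;
    private final double remoteFraction; // in [0, 1]
    private final Random rng;

    LocalityWorkload(int numPartitions, double remoteFraction, long seed) {
        if (numPartitions < 1) {
            throw new IllegalArgumentException("numPartitions must be >= 1");
        }
        this.numPartitions = numPartitions;
        this.remoteFraction = remoteFraction;
        this.rng = new Random(seed);
    }

    // Choose the partition whose data the next transaction will read.
    int pickTargetPartition(int basePartition) {
        if (numPartitions == 1 || rng.nextDouble() >= remoteFraction) {
            return basePartition; // local access: single-partition transaction
        }
        // Pick uniformly among the other numPartitions - 1 partitions.
        int other = rng.nextInt(numPartitions - 1);
        return other >= basePartition ? other + 1 : other;
    }

    public static void main(String[] args) {
        LocalityWorkload workload = new LocalityWorkload(8, 0.25, 42L);
        int remote = 0;
        int trials = 10_000;
        for (int i = 0; i < trials; i++) {
            if (workload.pickTargetPartition(0) != 0) {
                remote++;
            }
        }
        System.out.printf("multi-partition fraction: %.3f%n", remote / (double) trials);
    }
}
```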

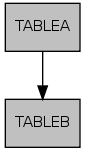

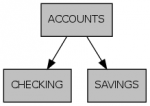

SmallBank (smallbank)

| Property | Value |
|---|---|
| Specification | Original Paper |
| Source Code | /src/benchmarks/edu/brown/benchmark/smallbank |
| Number of Tables | 3 |
| Number of Procedures | 6 |

This workload models a banking application in which transactions perform simple read and update operations on customers’ checking and savings accounts. All of the transactions involve a small number of tuples. The transactions’ access patterns are skewed such that a small number of accounts receive most of the requests. We also extended the original SmallBank implementation with an additional transaction that transfers money from one customer’s account to another. This benchmark was originally written by Mohammad Alomari and Michael Cahill.
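Two features of SmallBank described above are easy to show in isolation: skewed account selection and the added transfer transaction. In this hypothetical sketch the skew is modeled as a hotspot (a fixed set of accounts chosen with a given probability), only checking balances are tracked, and amounts are in cents. The hotspot size, the probability, and all names are illustrative assumptions, not the benchmark's actual settings.

```java
import java.util.Arrays;
import java.util.Random;

// Hypothetical sketch: hotspot skew plus a transfer between two checking balances.
class SmallBankSketch {
    private final long[] checking; // balances in cents
    private final int hotspotSize;
    private final double hotspotProbability;
    private final Random rng;

    SmallBankSketch(int numAccounts, long initialBalance, int hotspotSize,
                    double hotspotProbability, long seed) {
        if (hotspotSize < 1 || hotspotSize >= numAccounts) {
            throw new IllegalArgumentException("need 1 <= hotspotSize < numAccounts");
        }
        this.checking = new long[numAccounts];
        Arrays.fill(checking, initialBalance);
        this.hotspotSize = hotspotSize;
        this.hotspotProbability = hotspotProbability;
        this.rng = new Random(seed);
    }

    // Skewed choice: with hotspotProbability, pick one of the first hotspotSize accounts.
    int pickAccount() {
        if (rng.nextDouble() < hotspotProbability) {
            return rng.nextInt(hotspotSize);
        }
        return hotspotSize + rng.nextInt(checking.length - hotspotSize);
    }

    // Debit one account and credit another, or change nothing if the source cannot
    // cover the amount. In the DBMS both updates would run inside one transaction.
    boolean transfer(int from, int to, long amount) {
        if (from == to || amount <= 0 || checking[from] < amount) {
            return false;
        }
        checking[from] -= amount;
        checking[to] += amount;
        return true;
    }

    long balance(int account) {
        return checking[account];
    }

    public static void main(String[] args) {
        SmallBankSketch bank = new SmallBankSketch(1000, 10_000L, 10, 0.9, 7L);
        int from = bank.pickAccount();
        int to = bank.pickAccount();
        boolean ok = bank.transfer(from, to, 2_500L);
        System.out.println(ok + " " + bank.balance(from) + " " + bank.balance(to));
    }
}
```

Because most requests land on the same few accounts, concurrent transactions frequently conflict on those rows, which is what makes the skew interesting to a concurrency control scheme.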

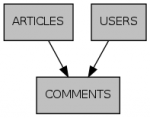

Articles (articles)

| Property | Value |
|---|---|
| Specification | – |
| Source Code | /src/benchmarks/edu/brown/benchmark/articles |
| Number of Tables | 3 |
| Number of Procedures | 4 |

This workload simulates an online news aggregation site where users post articles and other users comment on them. All transactions in this benchmark are single-partition.
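One way a workload like this can keep every transaction on a single partition is to co-locate each comment with its parent article by partitioning both on the article id. The sketch below assumes that layout and uses an in-memory map per partition; the partitioning scheme, the modulo hash, and all names are hypothetical and are not taken from the benchmark's schema.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: comments are partitioned on their parent article's id,
// so an article and all of its comments live on one partition.
class ArticlesSketch {
    private final int numPartitions;
    // One map per partition: article id -> comments on that article.
    private final List<Map<Long, List<String>>> partitions = new ArrayList<>();

    ArticlesSketch(int numPartitions) {
        this.numPartitions = numPartitions;
        for (int p = 0; p < numPartitions; p++) {
            partitions.add(new HashMap<>());
        }
    }

    int partitionFor(long articleId) {
        return (int) Math.floorMod(articleId, (long) numPartitions);
    }

    void postArticle(long articleId) {
        partitions.get(partitionFor(articleId)).put(articleId, new ArrayList<>());
    }

    // Routed by the parent article id; returns the only partition touched.
    int addComment(long articleId, String text) {
        int p = partitionFor(articleId);
        List<String> comments = partitions.get(p).get(articleId);
        if (comments == null) {
            throw new IllegalArgumentException("unknown article " + articleId);
        }
        comments.add(text);
        return p;
    }

    // Reading an article's comments also touches a single partition.
    List<String> comments(long articleId) {
        return partitions.get(partitionFor(articleId)).get(articleId);
    }

    public static void main(String[] args) {
        ArticlesSketch site = new ArticlesSketch(4);
        site.postArticle(7L);
        int p = site.addComment(7L, "first!");
        System.out.println(p + " " + site.comments(7L)); // 3 [first!]
    }
}
```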

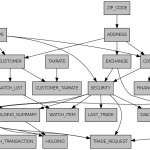

TPC-E (tpce) Incomplete

| Property | Value |
|---|---|
| Specification | Version 0.32.2g |
| Source Code | /src/benchmarks/edu/brown/benchmark/tpce |
| Number of Tables | 33 |
| Number of Procedures | 12 |

Since TPC-C is 20 years old, the TPC-E benchmark was developed to represent more modern OLTP applications. The TPC-E schema contains 33 tables with a diverse set of foreign key dependencies between them. It also features 12 stored procedures: ten are executed in the regular transaction mix, and two are “clean-up” procedures that are invoked at fixed intervals. These clean-up procedures are of particular interest because they perform full-table scans and updates on a wide variety of tables.